Logistic Regression Model

Cost Function

We cannot use the same cost function that we use for linear regression, because plugging the logistic hypothesis into it makes $J(\theta)$ wavy, with many local optima. In other words, it will not be a convex function.
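The loss of convexity can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses a single made-up training example ($x = 1$, $y = 0$) and the squared-error cost from linear regression with a sigmoid hypothesis. It does not show multiple local optima, only that the curve rises above one of its own chords, which a convex function can never do.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def squared_error_cost(theta, x=1.0, y=0.0):
    """Linear-regression-style cost for one example, with a sigmoid hypothesis.

    The example (x=1, y=0) is a hypothetical choice made for illustration.
    """
    return 0.5 * (sigmoid(theta * x) - y) ** 2

# A convex function satisfies f(midpoint) <= average of f at the endpoints.
a, b = 2.0, 6.0
midpoint_value = squared_error_cost((a + b) / 2)
chord_value = 0.5 * (squared_error_cost(a) + squared_error_cost(b))

# True here means the convexity inequality fails on this interval.
violates_convexity = midpoint_value > chord_value
print(midpoint_value, chord_value, violates_convexity)
```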

Instead, our cost function for logistic regression looks like:

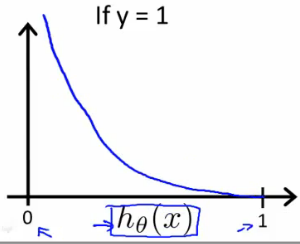

When $y = 1$, we get the following plot for $J(\theta)$ vs $h_{\theta}(x)$:

Similarly, when $y = 0$, we get the following plot for $J(\theta)$ vs $h_{\theta}(x)$:

If our correct answer $y$ is $0$, then the cost function will be $0$ if our hypothesis function also outputs $0$. If our hypothesis approaches $1$, then the cost function will approach infinity.

If our correct answer $y$ is $1$, then the cost function will be $0$ if our hypothesis function outputs $1$. If our hypothesis approaches $0$, then the cost function will approach infinity.

Note that writing the cost function in this way guarantees that $J(\theta)$ is convex for logistic regression.
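The limiting behaviour described above can be seen with a few numbers. This is a minimal sketch that assumes the per-example cost is the usual $-\log(h_\theta(x))$ when $y = 1$ and $-\log(1 - h_\theta(x))$ when $y = 0$; the function name `cost` and the sample values of `h` are illustrative.

```python
import math

def cost(h, y):
    """Per-example logistic regression cost, assuming 0 < h < 1 and y in {0, 1}."""
    if y == 1:
        return -math.log(h)
    return -math.log(1.0 - h)

# Cost is near 0 when the hypothesis agrees with the label
# and grows without bound as it approaches the wrong label.
for h in (0.001, 0.5, 0.999):
    print(f"h={h}: cost if y=1 is {cost(h, 1):.4f}, cost if y=0 is {cost(h, 0):.4f}")
```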

Simplified Cost Function and Gradient Descent

We can compress our cost function’s two conditional cases into one case:

$$\mathrm{Cost}(h_{\theta}(x), y) = -y \log(h_{\theta}(x)) - (1 - y) \log(1 - h_{\theta}(x))$$

Notice that when $y$ is equal to $1$, then the second term $(1 - y) \text{log}(1 - h_{\theta}(x))$ will be zero and will not affect the result. If $y$ is equal to $0$, then the first term $-y\text{log}(h_{\theta}(x))$ will be zero and will not affect the result.
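A quick way to convince yourself that the two terms switch each other off is to compare the single-expression form with the two-case form directly. The sketch below assumes the two-case cost is $-\log(h_\theta(x))$ for $y = 1$ and $-\log(1 - h_\theta(x))$ for $y = 0$; both function names are made up for this example.

```python
import math

def cost_piecewise(h, y):
    """Two-case definition: one branch per label."""
    if y == 1:
        return -math.log(h)
    return -math.log(1.0 - h)

def cost_compressed(h, y):
    """Single expression: the term whose factor is 0 drops out."""
    return -y * math.log(h) - (1 - y) * math.log(1.0 - h)

# Compare both forms over a few hypothesis outputs and both labels.
checks = [
    math.isclose(cost_piecewise(h, y), cost_compressed(h, y), abs_tol=1e-12)
    for h in (0.1, 0.5, 0.9)
    for y in (0, 1)
]
print(all(checks))
```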

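We can fully write out our entire cost function as follows:

$$J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log(h_{\theta}(x^{(i)})) + (1 - y^{(i)}) \log(1 - h_{\theta}(x^{(i)})) \right]$$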

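A vectorized implementation, where $g$ is the sigmoid function applied elementwise, is:

$$h = g(X\theta)$$

$$J(\theta) = \frac{1}{m} \left( -y^{T} \log(h) - (1 - y)^{T} \log(1 - h) \right)$$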
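As a rough sketch of what the vectorized cost looks like in code, the NumPy example below computes $J(\theta)$ both as an explicit loop over examples and as matrix products, assuming the usual form $J(\theta) = \frac{1}{m}(-y^{T}\log(h) - (1 - y)^{T}\log(1 - h))$ with $h = g(X\theta)$. The design matrix `X` (with a leading column of ones), the labels `y`, and the value of `theta` are toy values chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def cost_loop(theta, X, y):
    """J(theta) as an explicit sum over the m training examples."""
    m = len(y)
    total = 0.0
    for i in range(m):
        h_i = sigmoid(X[i] @ theta)
        total += y[i] * np.log(h_i) + (1 - y[i]) * np.log(1 - h_i)
    return -total / m

def cost_vectorized(theta, X, y):
    """J(theta) using matrix-vector products instead of a loop."""
    m = len(y)
    h = sigmoid(X @ theta)
    return (-y @ np.log(h) - (1 - y) @ np.log(1 - h)) / m

# Toy data (assumed): first column is the intercept feature x_0 = 1.
X = np.array([[1.0, 0.5], [1.0, 1.5], [1.0, 3.0]])
y = np.array([0.0, 0.0, 1.0])
theta = np.array([-1.0, 0.5])

J_loop = cost_loop(theta, X, y)
J_vec = cost_vectorized(theta, X, y)
print(J_loop, J_vec)
```

With $\theta = 0$ every prediction is $0.5$, so the cost equals $\log 2 \approx 0.693$ regardless of the labels, which makes a convenient sanity check.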

Gradient Descent

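Remember that the general form of gradient descent is:

$$\text{Repeat} \; \left\{ \; \theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \; \right\}$$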

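We can work out the derivative part using calculus to get:

$$\text{Repeat} \; \left\{ \; \theta_j := \theta_j - \frac{\alpha}{m} \sum_{i=1}^{m} \left( h_{\theta}(x^{(i)}) - y^{(i)} \right) x_j^{(i)} \; \right\}$$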

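Notice that this update rule has the same form as the one we used in linear regression; the only difference is that $h_{\theta}(x)$ is now the sigmoid of $\theta^{T} x$ rather than $\theta^{T} x$ itself. We still have to simultaneously update all values in $\theta$.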

A vectorized implementation is:

$$\theta := \theta - \frac{\alpha}{m} X^{T} \left( g(X\theta) - y \right)$$
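The vectorized update can be sketched in a few lines of NumPy. This is an illustrative implementation, not a tuned one: it assumes the update $\theta := \theta - \frac{\alpha}{m} X^{T}(g(X\theta) - y)$, and the learning rate, iteration count, and toy dataset are arbitrary choices made for the example. Computing the whole gradient before assigning `theta` is what makes the update simultaneous.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, X, y):
    """Logistic regression cost J(theta)."""
    m = len(y)
    h = sigmoid(X @ theta)
    return (-y @ np.log(h) - (1 - y) @ np.log(1 - h)) / m

def gradient_descent(X, y, alpha=0.1, num_iters=1000):
    """Batch gradient descent starting from theta = 0."""
    m, n = X.shape
    theta = np.zeros(n)
    for _ in range(num_iters):
        # Full gradient first, then one assignment: all theta_j update together.
        grad = X.T @ (sigmoid(X @ theta) - y) / m
        theta = theta - alpha * grad
    return theta

# Toy, non-separable data (assumed): intercept column plus one feature.
x1 = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0])
X = np.column_stack([np.ones_like(x1), x1])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0])

theta = gradient_descent(X, y)
initial_cost = cost(np.zeros(2), X, y)
final_cost = cost(theta, X, y)
print(theta, initial_cost, final_cost)
```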

Advanced Optimization

“Conjugate gradient”, “BFGS”, and “L-BFGS” are more sophisticated, faster ways to optimize $\theta$ that can be used instead of gradient descent. It is suggested that you do not write these algorithms yourself (unless you are an expert in numerical computing) but use existing libraries instead, as they are already tested and highly optimized.
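As a rough preview of what handing the problem to a library can look like, the sketch below uses SciPy's `scipy.optimize.minimize` with `method="BFGS"`. SciPy is a choice made for this example and is not the tool the text prescribes; the helper name `cost_and_gradient` and the toy data are likewise assumptions. Passing `jac=True` tells `minimize` that the supplied function returns both the cost and its gradient.

```python
import numpy as np
from scipy.optimize import minimize

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

def cost_and_gradient(theta, X, y):
    """Return J(theta) and its gradient for logistic regression."""
    m = len(y)
    h = sigmoid(X @ theta)
    J = (-y @ np.log(h) - (1 - y) @ np.log(1 - h)) / m
    grad = X.T @ (h - y) / m
    return J, grad

# Toy, non-separable data (assumed): intercept column plus one feature.
x1 = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0])
X = np.column_stack([np.ones_like(x1), x1])
y = np.array([0.0, 0.0, 1.0, 0.0, 1.0, 1.0])

# The optimizer picks its own step sizes, so no learning rate is supplied.
result = minimize(cost_and_gradient, x0=np.zeros(2), args=(X, y),
                  jac=True, method="BFGS")
theta_opt = result.x
print(theta_opt, result.fun)
```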

First, we need to provide a function that evaluates the following two functions for a given input value $\theta$: